Horaud, Radu (19..-.... ; computer scientist)

Radu Patrice Horaud is a research director at INRIA Grenoble Rhône-Alpes, where he founded and leads the PERCEPTION team. His research interests cover computational vision, audio signal processing, audio-visual scene analysis, machine learning, and robotics. He has authored over 160 scientific publications.

Radu pioneered work in computer vision using range data (depth images) and has developed a number of principles and methods at the crossroads of computer vision and robotics. In 2006, he began developing audio-visual fusion and recognition techniques in the context of human-robot interaction.

Radu Horaud was the scientific coordinator of the European Marie Curie network VISIONTRAIN (2005-2009) and of the STREP projects POP (2006-2008) and HUMAVIPS (2010-2013), and the principal investigator of a collaborative project between INRIA and the Samsung Advanced Institute of Technology (SAIT) on computer vision algorithms for 3D television (2010-2013). In 2013 he was awarded an ERC Advanced Grant for his five-year project VHIA (2014-2019).

Videos

Robot heads and acoustic laboratories

Part 2: Methodological Foundations 2.1. Robot heads and acoustic laboratories 2.2. Binaural Processing Pipeline 2.3. Continuous-time Fourier transform 2.4. Continuous short-time Fourier transform 2.5

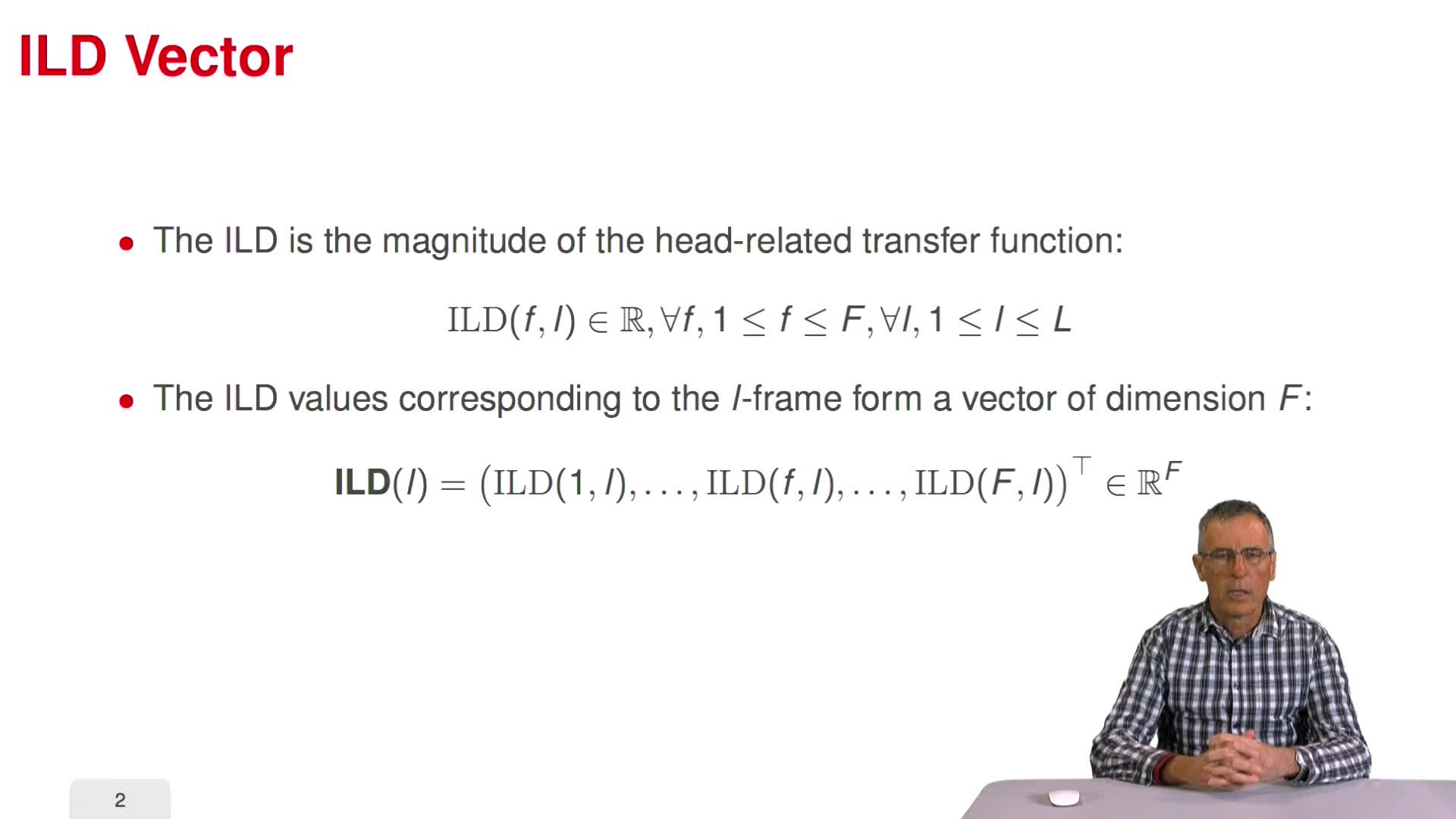

Binaural features

Part 2: Methodological Foundations 2.1. Robot heads and acoustic laboratories 2.2. Binaural Processing Pipeline 2.3. Continuous-time Fourier transform 2.4. Continuous short-time

Embedding the microphones in a robot head

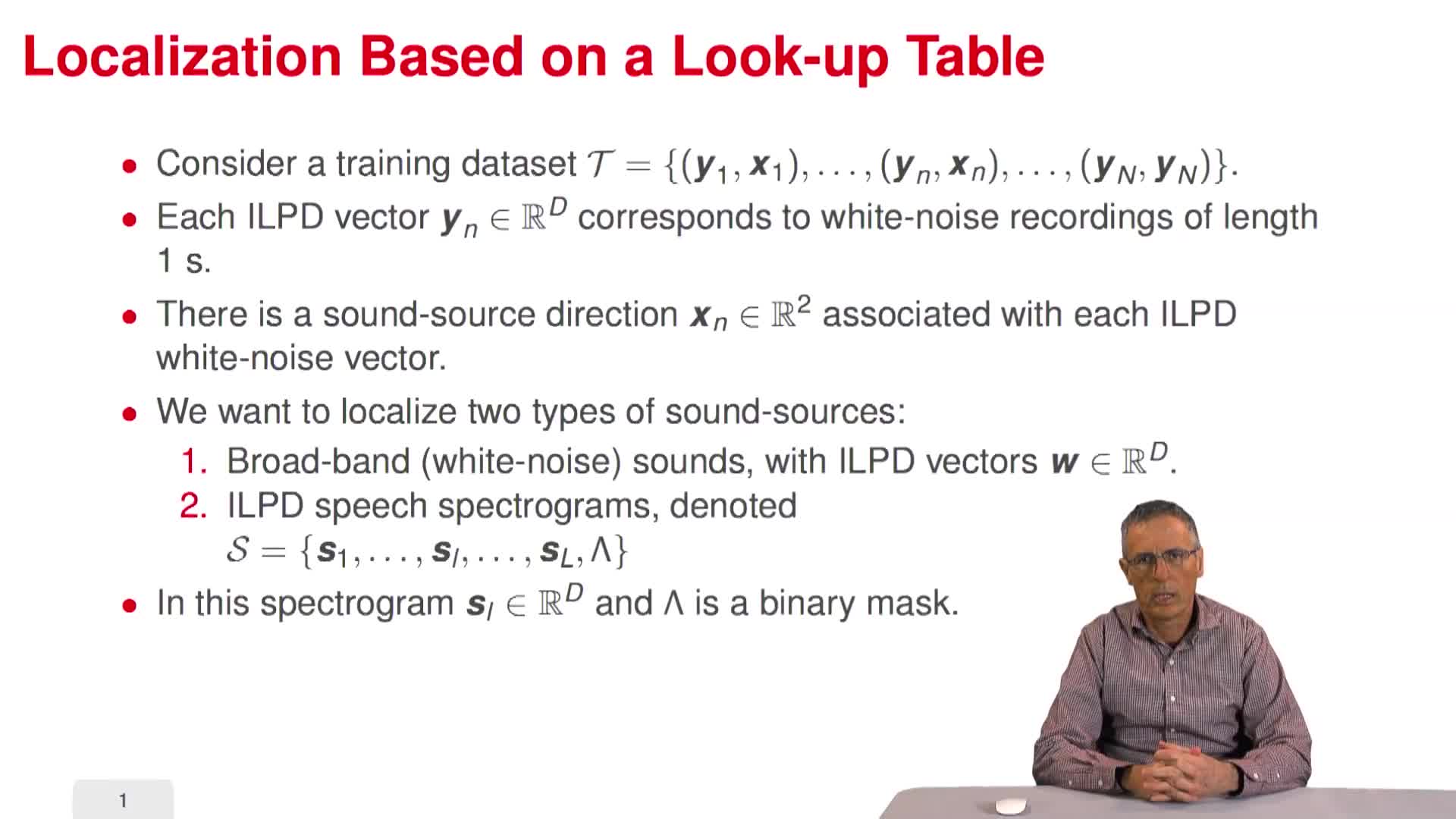

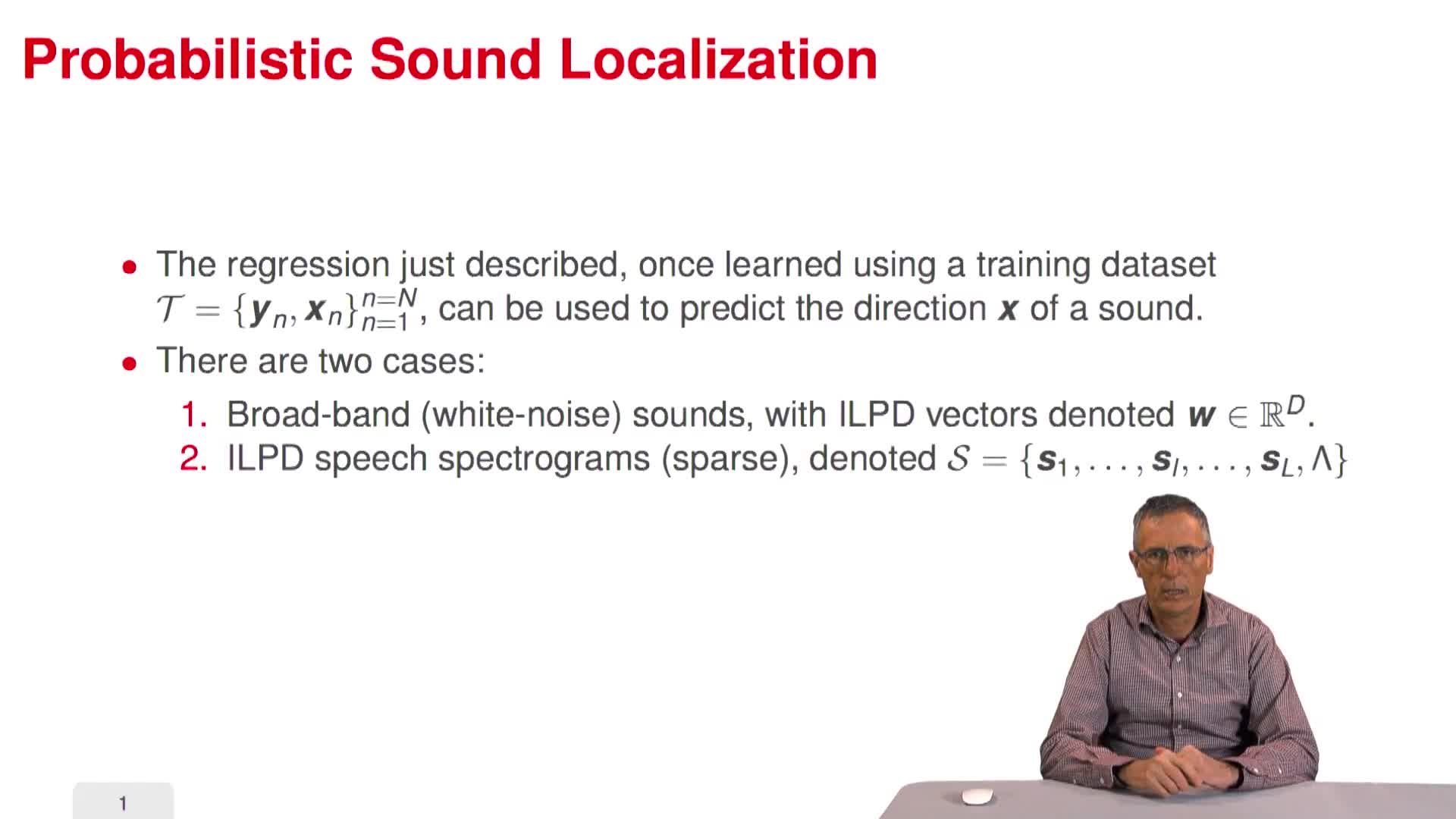

Part 3: Sound-Source Localization 3.1. Time difference of arrival (TDOA) 3.2. Estimation of TDOA by cross-correlation 3.3. Estimation of TDOA in the spectral domain 3.4. The geometry of two

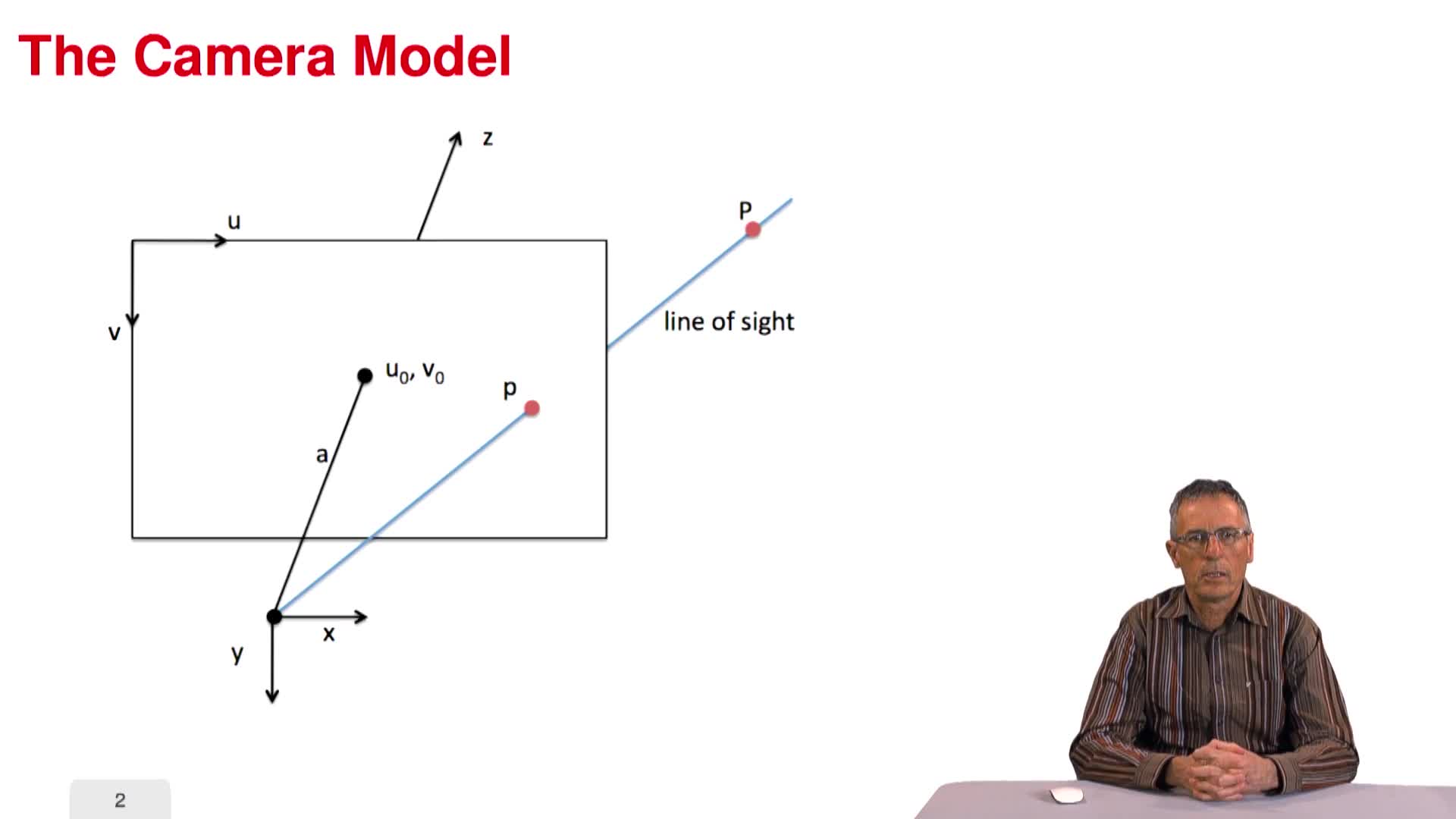

The geometry of vision

Part 5: Fusion of Audio and Vision 5.1. Audio-visual processing challenges 5.2. Representation of visual information 5.3. The geometry of vision 5.4. Audio-visual feature association 5.5. Audio

Auditory scene analysis

Part 1: Introduction to Robot Hearing 1.1. Why do robots need to hear? 1.2. Human-robot interaction 1.3. Auditory scene analysis 1.4. Audio signal processing in brief 1.5.

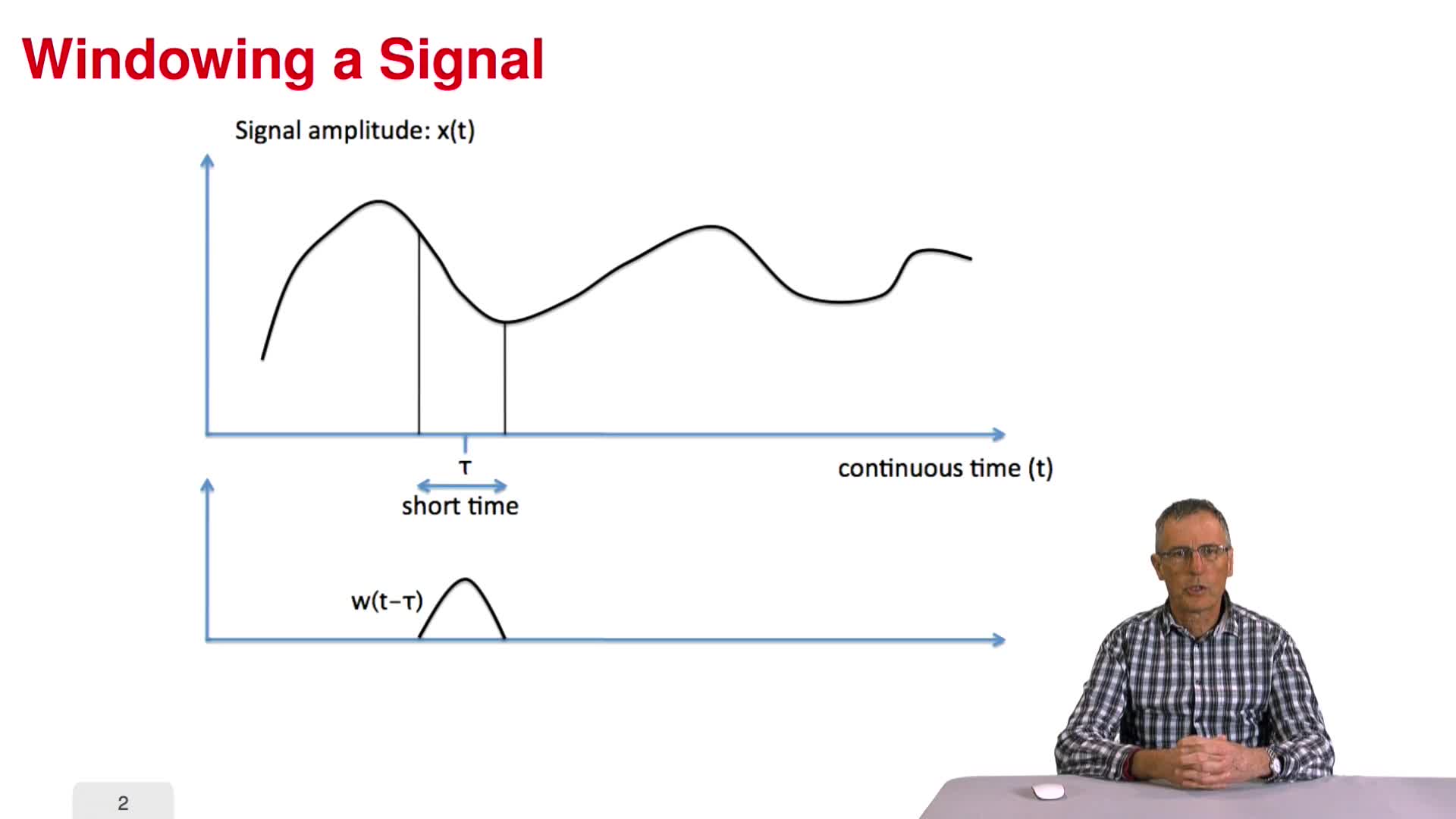

Continuous short-time Fourier transform

Part 2: Methodological Foundations 2.1. Robot heads and acoustic laboratories 2.2. Binaural Processing Pipeline 2.3. Continuous-time Fourier transform 2.4. Continuous short-time
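For reference, one common textbook convention for the continuous short-time Fourier transform of a signal $x(t)$ is sketched below; it is not taken from the video, and the window $w$ (for example a Gaussian, which gives the Gabor transform) and the sign convention of the exponent are assumptions. Setting $w \equiv 1$ recovers the continuous-time Fourier transform $X(\omega)$.

$$
X(\omega) = \int_{-\infty}^{\infty} x(t)\, e^{-i\omega t}\, dt,
\qquad
X(\tau, \omega) = \int_{-\infty}^{\infty} x(t)\, w(t - \tau)\, e^{-i\omega t}\, dt
$$

The window $w(t - \tau)$ localizes the analysis around time $\tau$, so $X(\tau, \omega)$ describes the frequency content of $x$ near that instant rather than over the whole signal.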

Estimation of TDOA by cross-correlation

Part 3: Sound-Source Localization 3.1. Time difference of arrival (TDOA) 3.2. Estimation of TDOA by cross-correlation 3.3. Estimation of TDOA in the spectral domain 3.4. The geometry of two
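As an illustration of the idea named in this video title, here is a minimal sketch (not the course's own code) that estimates the TDOA between two microphone signals by picking the lag that maximizes their time-domain cross-correlation. The function names `cross_correlation` and `estimate_tdoa`, the brute-force search, and the sign convention (positive TDOA means the right signal lags the left one) are assumptions made for this example; spectral-domain estimators such as those in item 3.3 are not shown.

```python
import random


def cross_correlation(x, y, lag):
    """Return sum_n x[n] * y[n + lag] over the indices where both samples exist."""
    total = 0.0
    for n in range(len(x)):
        m = n + lag
        if 0 <= m < len(y):
            total += x[n] * y[m]
    return total


def estimate_tdoa(left, right, fs, max_lag):
    """Estimate the TDOA in seconds from sampled signals (hypothetical helper).

    Searches integer lags in [-max_lag, max_lag] and returns the one with the
    largest cross-correlation, divided by the sampling rate fs. A positive
    result means `right` receives the sound later than `left`.
    """
    lags = range(-max_lag, max_lag + 1)
    best_lag = max(lags, key=lambda k: cross_correlation(left, right, k))
    return best_lag / fs


if __name__ == "__main__":
    # Synthetic test: white noise reaching the right microphone 3 samples late.
    rng = random.Random(0)
    left = [rng.gauss(0.0, 1.0) for _ in range(200)]
    right = [0.0] * 3 + left[:-3]
    print(estimate_tdoa(left, right, fs=16000, max_lag=10))
```

In practice `max_lag` can be bounded by the microphone geometry, roughly `ceil(d / c * fs)` for spacing `d` and speed of sound `c`, which also keeps the quadratic brute-force search cheap; longer signals would normally use an FFT-based correlation instead.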

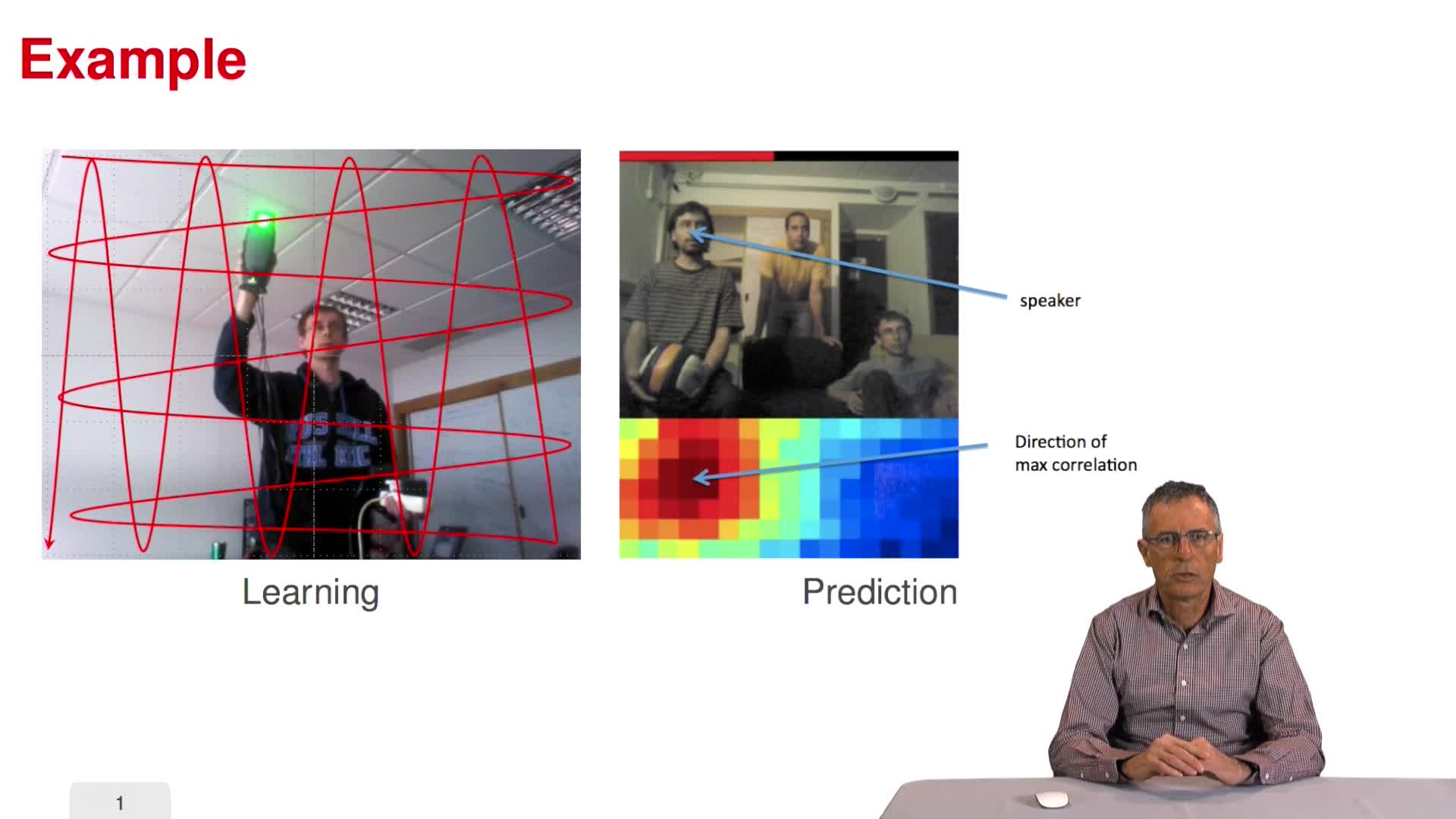

Example of sound direction estimation

Part 3: Sound-Source Localization 3.1. Time difference of arrival (TDOA) 3.2. Estimation of TDOA by cross-correlation 3.3. Estimation of TDOA in the spectral domain 3.4. The geometry of two
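A common way to turn a TDOA into a sound direction is the far-field, free-field model for two microphones a distance $d$ apart: $\theta = \arcsin(c\,\tau / d)$, where $\tau = t_\text{right} - t_\text{left}$ and $\theta$ is measured from the perpendicular bisector of the microphone baseline, positive toward the left microphone. The sketch below assumes that model, a speed of sound of 343 m/s, and the hypothetical function name `azimuth_from_tdoa`; it ignores the scattering caused by a robot head, so it is only a first approximation for microphones embedded in one.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed value for air at roughly 20 degrees C


def azimuth_from_tdoa(tdoa, mic_distance, c=SPEED_OF_SOUND):
    """Far-field azimuth in degrees from a TDOA (s) and microphone spacing (m).

    Assumes a plane wave and free-field propagation. Positive TDOA
    (right microphone hears the sound later) gives a positive angle.
    """
    s = c * tdoa / mic_distance
    # Measurement noise can push |c * tdoa| slightly above d; clamp to asin's domain.
    s = max(-1.0, min(1.0, s))
    return math.degrees(math.asin(s))


if __name__ == "__main__":
    # A path difference of 0.1 m across a 0.2 m baseline corresponds to about 30 degrees.
    print(azimuth_from_tdoa(0.1 / SPEED_OF_SOUND, 0.2))
```

Note that two microphones only determine a cone of directions around their baseline, so this model cannot distinguish front from back on its own.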

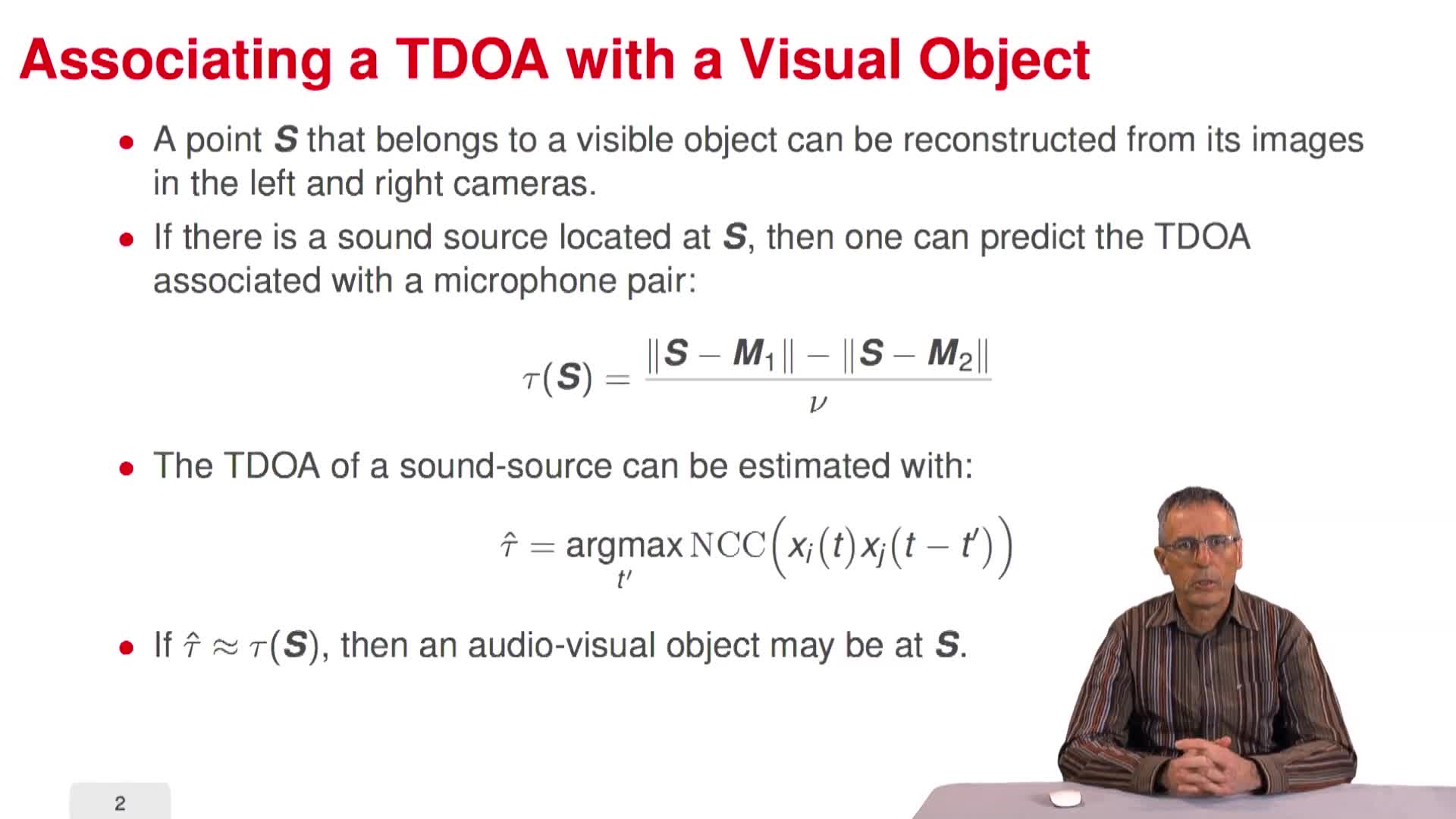

Visually-guided audio localization

Part 5: Fusion of Audio and Vision 5.1. Audio-visual processing challenges 5.2. Representation of visual information 5.3. The geometry of vision 5.4. Audio-visual feature association 5.5. Audio

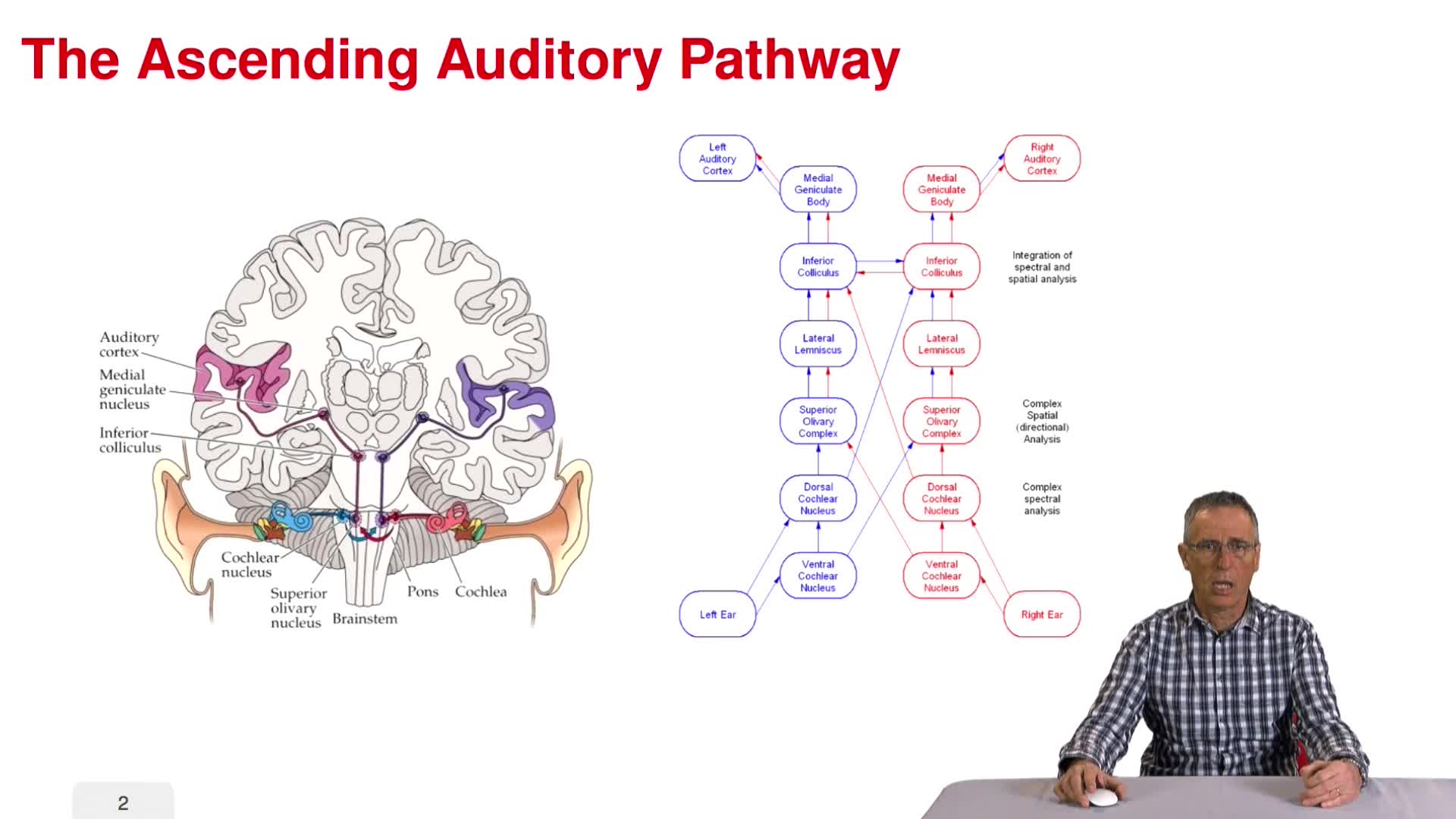

Audio processing in the midbrain

Part 1: Introduction to Robot Hearing 1.1. Why do robots need to hear? 1.2. Human-robot interaction 1.3. Auditory scene analysis 1.4. Audio signal processing in brief 1.5.