
Multimodality and Memory: Outlining Interface Topics in Multimodal Natural Language Processing


Description

Multimodal dialogue, the use of speech and non-speech signals, is the basic form of interaction. As such, it is couched in the basic interaction mechanisms of grounding and repair. This apparently straightforward view already has several repercussions. First, non-speech gestures need representations that are subject to the parallelism constraints on clarification requests known from verbal expressions. Second, co-activity between speaker and addressee on some channel is the rule for virtually the whole time course of an interaction, which makes multimodal overlap the norm and thereby calls into question the orthodox notion of sequential turns. Third, if turns are difficult to maintain, an alternative model of interaction is needed: we propose to think of it in terms of polyphonic interaction inspired by (classical) music. The multimodal speech and non-speech examples given throughout the talk all seem explainable only when one considers at least the interaction of contents and dialogue semantics, working-memory constraints, and attentional mechanisms; hence they are examples of interface topics for cognitive science.


In the same collection

-

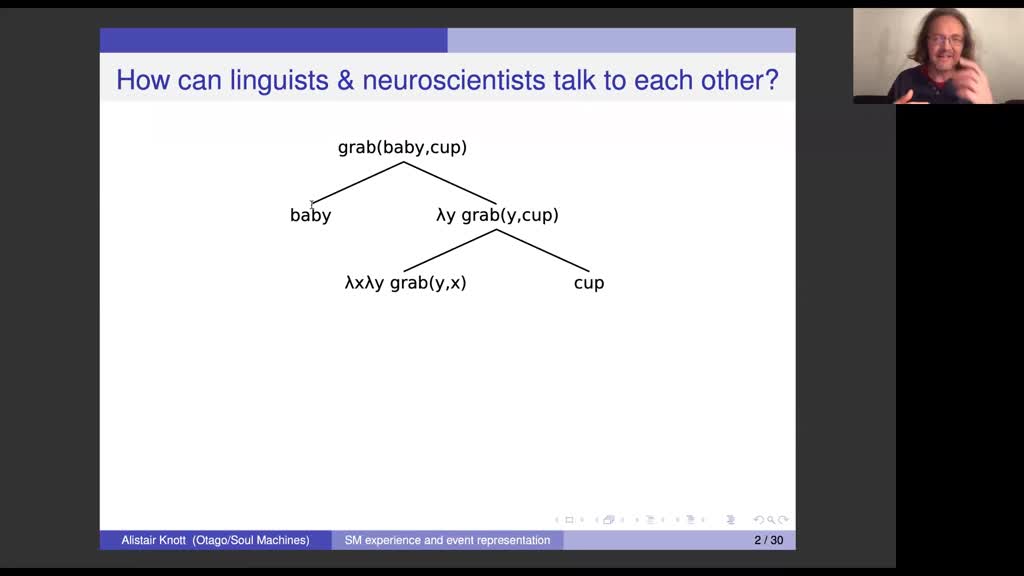

A neural model of sensorimotor experience, and of the representation, storage and communication of …

Many cognitive scientists have advanced ‘embodied’ models of human language, in which language is connected in some way to the sensorimotor (SM) mechanisms that engage with the world. I’ll introduce a

- Dialogue Context in Memory

Recent years have seen the emergence of theories that can be used to analyze a variety of phenomena characteristic of conversational interaction, including non-sentential utterances, manual …

- How Do Pre- and Post-Encoding Processes Affect Episodic Memory?

What post-encoding processes cause forgetting? For decades there had been controversy as to whether forgetting is caused by decay over time or by interference from irrelevant information, and a …

- How prosody helps infants and children to break into communication (Judit Gervain)

The talk will present four sets of studies with young infants and children to show how prosody helps them learn about different aspects of language, from learning basic word order through …

- Episodic memory and the importance of attribution processes to assess the retrieved memory contents

The Integrative Memory model describes the core mechanisms leading to recollection (i.e., recalling qualitative details about a past event) and familiarity (i.e., identifying some event as …

- The neuropragmatics of dialogue and discourse

In real-life communication, language is usually used for more than the exchange of propositional content. Speakers and listeners want to get things done through their exchange of linguistic utterances.

-

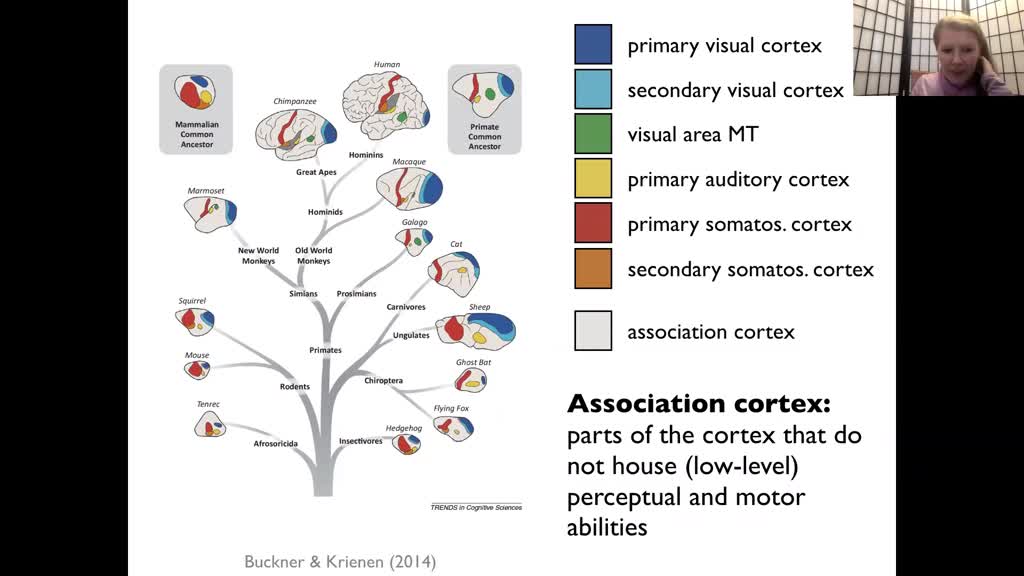

Language within the mosaic of social cognition

In spite of high genetic overlap and broadly similar neural organization between humans and non-human primates, humans surpass all other species in their abilities to solve novel problems, in the

-

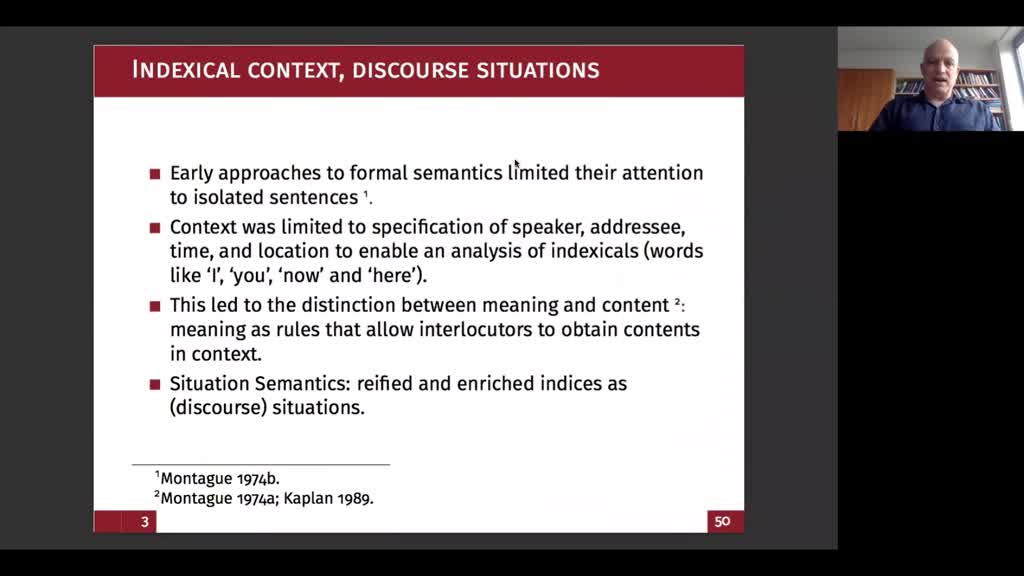

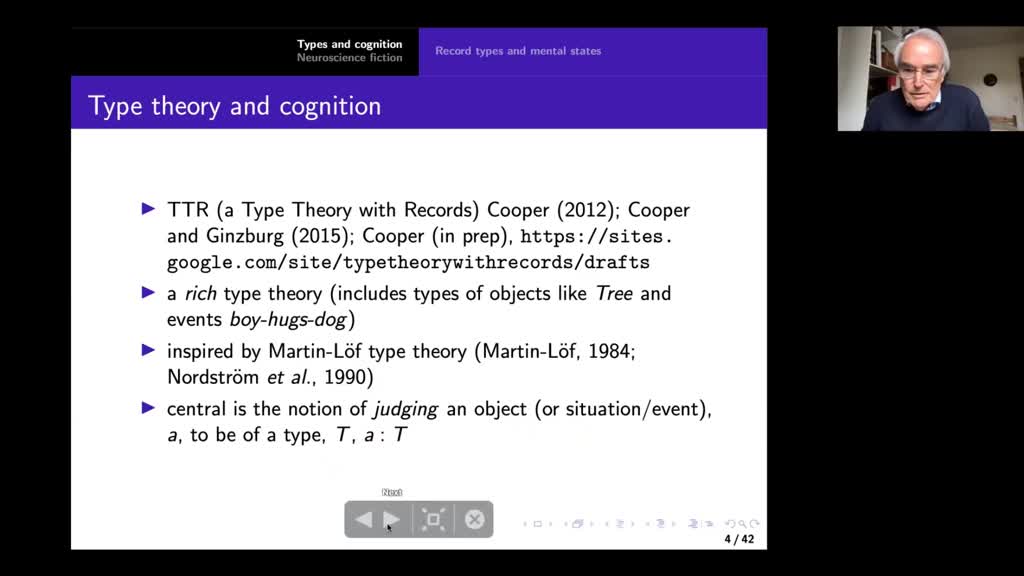

Modelling Memory with Types: semantics and neural representation

I will argue that record types in TTR (a type theory with records) can be used to model mental states such as memory or belief. For example, a type modelling a belief or memory state is a type of

- Universal Anaphora and Dialogue Phenomena

On the same theme

- The Time of a Festival: Rites and Memories (Patrick Accolla, Yannick Geffroy)

The patronal festival of the village of Utelle, filmed on 15, 16 and 17 August by Patrick Accolla and Yannick Geffroy.

- Ten Years of MERE 29 and a Tribute to the Spanish Republicans (Iván López Cabello, Jean Sala-Pala)

3rd International Colloquium "Exiled Spanish Republicans during the Second World War: Forced Labour and Resistance. Rotspanier, 80 Years Later". Brest, 17-19 March 2022. Ten years of …

- Editorial Projects: Exiled Spanish Republicans during the Second World War (Iván López Cabello, Odette Martinez-Maler, Guadalupe Adámez Castro, José María Naharro-Calderón)

3rd International Colloquium "Exiled Spanish Republicans during the Second World War: Forced Labour and Resistance". Brest, 17-19 March 2022. Editorial projects (Brest, 18 March …

- Rotspanier, 80 Years Later (Part 1) (Iván López Cabello, Hugues Vigouroux, Claudine Allende Santa Cruz)

3rd International Colloquium "Exiled Spanish Republicans during the Second World War: Forced Labour and Resistance. Rotspanier, 80 Years Later". Brest, 17-19 March 2022. Session: …

- Plague-Ridden Chronotopes: Between Barbed Wire and Exiles (Iván López Cabello, José María Naharro-Calderón)

3rd International Colloquium "Exiled Spanish Republicans during the Second World War: Forced Labour and Resistance". Brest, 17-19 March 2022. Lecture: José María Naharro …

- La caravana de la Memoria and the First Associative Networks in Brittany. A Memorial Struggle com… (Iván López Cabello, Alfons Cervera)

3rd International Colloquium "Exiled Spanish Republicans during the Second World War: Forced Labour and Resistance. Rotspanier, 80 Years Later". Brest, 17-19 March 2022. Lecture …

- Memory of the Exile of the Spanish Republicans on the French Atlantic Coast during the… (Iván López Cabello, Hugues Vigouroux, Gabrielle Garcia, Armelle Carrion, Luis Garrido Orozco, Carlos Fernandez, José Ruiz)

3rd International Colloquium "Exiled Spanish Republicans during the Second World War: Forced Labour and Resistance. Rotspanier, 80 Years Later". Brest, 17-19 March 2022. Session: …

- Benefits of Physical Activity and Memory Strategies during Ageing (Ilona Moutoussamy)

The project therefore aims to explain the benefits of physical activity for memory during ageing through the maintenance of executive functioning, which enables the use of …

- Anniversary Speech in Memory of Edouard Glissant (Sylvie Glissant)

Anniversary speech in memory of Edouard Glissant, at Pointe Chéry, Diamant, Martinique.

- Overturned Memory: A Biographical Journey through the Work of Séra (Séra)

This interview, conducted by Véronique Donnat, explores the biographical questions that shaped the work of Séra, painter, visual artist, sculptor and comic-book author.

- Interview with Claudia Feld, Researcher at CONICET (Claudia Feld)

The emergence or consolidation of emblematic sites, on which national public policies often rely, contributes to the visibility of issues related to traumatic pasts, but in …

- Lecture by Claudia Feld, Researcher at CONICET (Argentina)

The emergence or consolidation of emblematic sites, on which national public policies often rely, contributes to the visibility of issues related to traumatic pasts, but in …