Notice

Safety Verification of Deep Neural Networks

Description

Deep neural networks have achieved impressive experimental results in image classification, but can be surprisingly unstable with respect to adversarial perturbations, that is, minimal changes to the input image that cause the network to misclassify it. With potential applications including perception modules and end-to-end controllers for self-driving cars, this raises concerns about their safety. This lecture describes progress in developing a novel automated verification framework for deep neural networks that ensures the safety of their classification decisions with respect to image manipulations that should not affect the classification, for example scratches or changes to camera angle or lighting conditions. The techniques work directly with the network code and, in contrast to existing methods, can offer guarantees that adversarial examples are found if they exist. We implement the techniques using Z3 and evaluate them on state-of-the-art networks, including regularised and deep learning networks. We also compare against existing techniques for searching for adversarial examples.
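To make the idea of a guarantee that adversarial examples are found if they exist more concrete, here is a minimal sketch in plain Python. It is not the framework presented in the lecture, which uses Z3 and real networks. It assumes a toy linear classifier and an exhaustive search over a discretised region around the input; the names `classify` and `find_adversarial`, and the parameters `radius` and `step`, are hypothetical and chosen only for illustration.

```python
from itertools import product


def classify(weights, bias, x):
    """Toy linear classifier (an assumption, not a deep network).

    Returns the index of the highest-scoring class; ties go to the lowest index.
    """
    scores = [
        sum(w * xi for w, xi in zip(row, x)) + b
        for row, b in zip(weights, bias)
    ]
    return max(range(len(scores)), key=scores.__getitem__)


def find_adversarial(weights, bias, x, radius, step):
    """Exhaustively search a discretised box around x.

    Every coordinate is shifted by a multiple of `step`, up to `radius`
    in either direction. Returns the first grid point whose class differs
    from the class of x, or None if no grid point changes the class.
    """
    original = classify(weights, bias, x)
    k = int(round(radius / step))
    offsets = [i * step for i in range(-k, k + 1)]
    for delta in product(offsets, repeat=len(x)):
        candidate = [xi + d for xi, d in zip(x, delta)]
        if classify(weights, bias, candidate) != original:
            return candidate
    return None


# Hypothetical two-class, two-feature example.
W = [[1.0, 0.0], [0.0, 1.0]]
b = [0.0, 0.0]
x = [4.0, 2.0]

safe = find_adversarial(W, b, x, radius=1.0, step=1.0)
unsafe = find_adversarial(W, b, x, radius=2.0, step=1.0)
```

Because the search visits every grid point, a result of `None` means that no adversarial example exists on that grid. It says nothing about points between grid points, and the cost grows exponentially with the input dimension, so this sketch only illustrates the kind of guarantee involved, not a practical verifier.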

Speaker / Scientific lead

Topic

On the same topic

-

The Affinity project - Assessing the behaviour of people with ASD in the presence of their affinity

Chérel, Myriam; Bayou-Outtas, Meriem; Fournier, Julie; Bucher, Emma; Mann, Pauline

Through this series of interviews, the LabEx invites you to discover the Affinity project, which looks at the relationship between people with an autism spectrum disorder and their…

-

Knowledge transfer and human-machine collaboration for training object class detectors

Ferrari, Vittorio

Object class detection is a central area of computer vision. It requires recognizing and localizing all objects of a predefined set of classes in an image. Detectors are usually trained under…

-

Visual Reconstruction and Image-Based Rendering

Szeliski, Richard

The reconstruction of 3D scenes and their appearance from imagery is one of the longest-standing problems in computer vision. Originally developed to support robotics and artificial intelligence…

-

From digital image processing and computer vision to neuroimaging: a journey…

Deriche, Rachid

Rachid Deriche gives a quick overview of his research, from his early work in image processing and analysis, on edge detection in particular, then in theory and…

-

How can image quality be improved?

Blanc-Féraud, Laure

The focus here is on details that are inaccessible in the image because the detector is too far from the scene being imaged, as for example in satellite imaging for the observation…

-

Image denoising through the ages (A perspective on image denoising)

Morel, Jean-Michel

Image denoising is a problem that has been written about at length, and one that seems almost impossible at first sight. Jean-Michel Morel presents the few key steps and formulas that make it possible to…

-

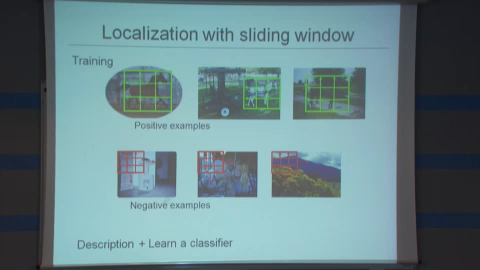

Interpreting image content (Visual object recognition): part 1

Schmid, Cordelia

In the first part of this course we introduce robust and invariant image descriptors and their application to similar-image search. We then explain how…

-

Interpreting image content (Visual object recognition): part 2

Schmid, Cordelia

In the first part of this course we introduce robust and invariant image descriptors and their application to similar-image search. We then explain how…

-

Action recognition from video: some recent results

Schmid, Cordelia

While recognition in still images has received a lot of attention over the past years, recognition in videos is just emerging. In this talk I will present some recent results. Bags of features have…

-

The story of ISTAR, or SPOT in relief

Software for rendering satellite images of the Earth in relief led to the creation of Istar, a young French company founded by researchers from the Institut de Géodynamique du…